In a world where just about every application interfaces with a number of other applications, integration and interoperability testing (IIT) is often not addressed adequately in a project. In this article, I deal with some of the issues and risks of testing highly integrated applications and outline a test strategy for such applications.

You do not need to look far to see examples of software interoperability problems in the real world. Most organizations have a variety of hardware and software, often from a slate of several competing vendors whose products do not always work and play well together. You may be thinking that web-based applications enhance portability, and in some ways they do. However, web-based applications also introduce a new set of interoperability and compatibility concerns, such as browser compatibility and data interoperability when exchanging data via XML.

The Risks of Poor Integration and Interoperability

We've all felt the frustration of installing a software application on a PC and suddenly everything starts behaving very strangely, with problems ranging all the way from minor annoyances to system hangs and crashes. In some ways it makes you wish that there were rigorous compatibility and interoperability standards that software and hardware manufacturers must follow. On the other hand, if there were such industry-wide standards and enforcement, we know our choices would be limited and the products on the market would be expensive. So, we are in a grand dilemma between reliability and flexibility in which other tradeoffs also abound.

Lack of reliability and lack of flexibility are risks that are seen in diverse, highly integrated systems. It is up to the vendor to verify and validate applications to the extent that the customer will not experience compatibility problems. Here is the rub, however: the number of possible computing platform combinations is effectively endless and, therefore, impossible to test completely. In this article, we will examine the risks of such applications and some strategies to deal with testing a domain where there are no easy answers and no guarantees.
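To get a feel for why complete coverage is out of reach, a rough count helps. The sketch below is illustrative only: the platform dimensions and their values are hypothetical and are not taken from any real product matrix.

```python
from itertools import product
from math import prod

# Hypothetical platform dimensions, assumed purely for illustration.
PLATFORM_DIMENSIONS = {
    "operating_system": ["Windows", "macOS", "Linux"],
    "browser": ["Chrome", "Firefox", "Safari", "Edge"],
    "database": ["PostgreSQL", "MySQL", "Oracle"],
    "locale": ["en-US", "de-DE", "ja-JP", "pt-BR"],
    "partner_system_version": ["v1", "v2", "v3"],
}


def count_configurations(dimensions):
    """Return how many distinct configurations the dimensions produce."""
    return prod(len(values) for values in dimensions.values())


def all_configurations(dimensions):
    """Yield every configuration as a dict of dimension name -> chosen value."""
    names = list(dimensions)
    for combo in product(*(dimensions[name] for name in names)):
        yield dict(zip(names, combo))


total = count_configurations(PLATFORM_DIMENSIONS)
print(f"{total} configurations from {len(PLATFORM_DIMENSIONS)} dimensions")
```

Five modest dimensions already yield 432 configurations, and every added dimension multiplies that total. This is why IIT has to select configurations by risk rather than attempt all of them.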

The risks often seen in integration and interoperability are:

- Loss of data

As data is passed between applications, there is a risk the data can be lost or misdirected.

- Poor performance

Bottlenecks can be inadvertently built into shared applications, which results in poor performance.

- Unreliable operation

As software operates together on the same platform, a problem in one application can cause the entire system to crash.

- Incorrect operation

As data and control are passed between applications, are the results correct? Does the application operate the way the user has been trained to expect?

- Low maintainability

Although the goal of interoperability is to promote maintainability, that is not always the case. The application must be designed with maintainability in mind.

- Obsolete applications

If the application is built on any specific technology, there is a risk that two years from now that technology will be obsolete.

These risks can also be seen as the drivers for an IIT strategy. For example, to test the risk of data loss you would want to include passing data across defined interfaces and then using the data in other related applications.
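As a minimal sketch of a data-loss test, the check below reconciles what one application sent across an interface with what the receiving application actually holds. The `Record` type, the `reconcile` function, and the sample data are all hypothetical; a real test would read both sides from the applications under test.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """Hypothetical record exchanged across an interface."""
    record_id: str
    payload: str


def reconcile(sent, received):
    """Compare records sent across an interface with records that arrived."""
    sent_by_id = {r.record_id: r for r in sent}
    received_by_id = {r.record_id: r for r in received}

    # Sent but never arrived: candidate data loss.
    lost = sorted(set(sent_by_id) - set(received_by_id))
    # Arrived but never sent here: candidate misdirected data.
    unexpected = sorted(set(received_by_id) - set(sent_by_id))
    # Arrived, but the content changed in transit.
    altered = sorted(
        rid
        for rid in set(sent_by_id) & set(received_by_id)
        if sent_by_id[rid].payload != received_by_id[rid].payload
    )
    return {"lost": lost, "unexpected": unexpected, "altered": altered}


sent = [Record("A1", "100.00"), Record("A2", "250.50"), Record("A3", "75.25")]
received = [Record("A1", "100.00"), Record("A3", "75.2"), Record("B9", "10.00")]

report = reconcile(sent, received)
print(report)
```

Here record A2 is reported as lost, B9 as unexpected, and A3 as altered. Checking for altered content as well as missing records matters because the data still has to be usable in the downstream application.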

Key Point #1 - IIT should not be confused with basic functional testing. The purpose of IIT is to test interfaces and interoperability. It must be assumed that other forms of testing such as functional and compatibility testing have already been performed.

First, we need to define some basic terms:

- Compatibility testing

Comparing an application against a set of established operating standards to determine operational compatibility.

- Configuration testing

Testing to validate that an application functions correctly on a particular computer configuration.

- Integration testing

Testing the points of integration between units and systems.

- Interoperability testing

Testing with other applications that have met compliance standards; the ability of two or more systems or components to exchange information and to use the information that has been exchanged.

- Functional testing

Testing from an external perspective of what the application should do. Functional testing is performed from a "black box" perspective, with no knowledge of the inner workings of the application. Functional testing is cause/effect in nature.

Key Point #2 - There must be a way to build and maintain an integrated test environment (ITE) that closely resembles the production environment. Your test results are only as reliable as the environment in which you perform the test.

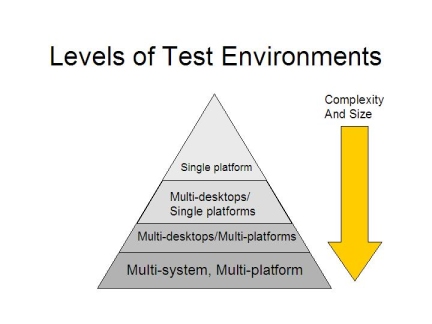

Figure 1 - Increasing Complexity of the Test Environment

The scope of IIT can be wide, including:

- Multi-units

These are the separate software units or segments that must work together correctly.

- Multi-systems

These are separate major systems or applications that must work together correctly.

- Spanned hardware

Software applications may reside on a variety of hardware platforms.

- Integrated hardware

Most hardware platforms have many compatibility issues with internal components.

- Disparate data sources and structures

Data does not come from universal sources and will be seen in many forms. Applications that work together must be able to accept and convert data to a usable form.

- Spanned geography

This introduces issues such as language and time zones, which add a new dimension to a project.

- Internal and external software sources

This speaks to the issue of control. We can control the testing of internal applications, but when we deal with other organizations, we must be able to communicate and get cooperation to achieve a total test.
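The point about disparate data sources and structures can be sketched as a small conversion test. The example below assumes two hypothetical feeds, one XML and one CSV, that describe the same customer with different field names and formatting. The feed layouts and the function names `from_xml` and `from_csv` are invented for illustration.

```python
import csv
import io
import xml.etree.ElementTree as ET


def from_xml(text):
    """Convert the hypothetical XML feed into common-form records."""
    root = ET.fromstring(text)
    return [
        {
            "id": customer.get("id"),
            "name": customer.findtext("name").strip(),
            "balance": float(customer.findtext("balance")),
        }
        for customer in root.findall("customer")
    ]


def from_csv(text):
    """Convert the hypothetical CSV feed into the same common form."""
    reader = csv.DictReader(io.StringIO(text))
    return [
        {
            "id": row["cust_id"],
            "name": row["cust_name"].strip(),
            "balance": float(row["bal"]),
        }
        for row in reader
    ]


XML_FEED = """<customers>
  <customer id="1001"><name>Ada Example</name><balance>12.50</balance></customer>
</customers>"""

CSV_FEED = "cust_id,cust_name,bal\n1001, Ada Example ,12.5\n"

# An interoperability check: both sources must agree once converted.
assert from_xml(XML_FEED) == from_csv(CSV_FEED)
print(from_xml(XML_FEED))
```

Converting every source to one common form before comparing keeps the test focused on whether the information survives the exchange, not on incidental differences such as padding or trailing zeros.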

Figure 2 - CM in IIT

With this wide scope of testing, there must be a way to control and co-ordinate this type of testing, which is where the co-process of configuration management (CM) comes into play. CM is the process of tracking all components of an application or system throughout its operational lifespan. CM is how the development, test, and production environments are controlled.

In Figure 2, a typical CM process is used to manage the integrated test environment and migrate applications to deployment. This picture shows an ongoing view of systems maintenance where units or components are checked out of a production source library. The check-out prevents multiple people from working on the same component at the same time. As the components pass low-level tests in the development environment, they are migrated to the system test environment for integration testing. Finally, after integration and interoperability tests are passed, the application is deployed and the source code becomes the new production version. In the event of problems, the application is restored from the old version or fixed on the fly and reinstalled.

Key Point #3 - Communication is a primary success factor in performing IIT.

In other forms of testing, such as compatibility, configuration and basic functional testing, it is possible to perform the tests in relative isolation. True, you may need to speak with other people to get the job done, but not to the extent of coordinating tests between systems and organizations. In IIT, communication and coordination are paramount. If people aren't talking, then the test isn't going to be successful.

Organizations have learned an important lesson in two recent real-life technology efforts: Y2K and e-commerce. Both of these efforts required integration and interoperability testing to validate that organizations could send data and control through multiple points to achieve an overall successful completion. In e-commerce, we also learned that systems are sometimes closed loops back to the customer, with several trails to be followed (Figure 3). These types of integration needs were present in the past as well, as applications have had to handle ever-increasing levels of integration.

Figure 3 - E-commerce Integration with the Closed Loop Back to the Customer

This means that to test integration and interoperability you have to pick up the phone and call people to coordinate the effort. E-mail is great, but don't depend on it. In fact, never confuse an e-mail or a memo with effective communication. Effective communication requires a sending party and a receiving party who are both actively engaged in the conversation. This means sending the message clearly and listening intently!

The communication factor becomes especially important when the test spans time zones and multiple languages.

Test Strategies for IIT

We see that IIT is often complex and wide-ranging. In fact, most test (and development) processes fail to scale when taken to the level of integration and interoperability. Casual test methods often fail to achieve the needed rigor and coverage. Rapid prototyping and other iterative methods are often focused on a single application, which minimizes the attention given to IIT. So, what can be done to test effectively for IIT?

Key Point #4 - Understand that exhaustive testing is not possible for just about any software application. This applies especially to IIT. Manage the risk of deployment by limiting your exposure. Then, take action on the information learned from problems found in small-scope deployments.

What this means is that systems that are highly integrated and disparate are by their very nature risky. It's like eating spicy Mexican food and then complaining about the heartburn. If you still want to eat spicy food, you'll need to find a way to deal with the heartburn. In highly integrated systems, we can mitigate some of the risk with the following strategies.

- Test Interfaces and Interoperability the Best You Can, Then Deploy to a Pilot Group

This is essentially a beta test, but there are some important considerations.

1. Beta testing has risks, such as the possibility of production defects, the low quality of feedback and the high risk of bad PR.

2. Limited deployment will not mitigate risk in all situations. There are some cases where limited deployment to a small audience is absolutely acceptable, such as when the risk is low to moderate. However, in high risk applications like medical devices, avionics, nuclear power controls, etc., a defect even in one site can cause catastrophic results!

- Build a Robust and Restorable IIT Environment and Perform IIT in That Environment

There are still risks due to the reality of non-exhaustive testing, but you may be able to perform a pilot or other form of limited deployment.

- Test Using Simulators

Of course, the problem with this is that a simulator is a contrived world. However, for initial tries simulators help reduce some risks. Just ask any driver's education teacher.

- Test Using a Live, but Segmented, Environment

This is often called an Integrated Test Environment. The concept is that test data is processed right along with real data in production, but the test data is identified in such a way that it never mingles with production data. For example, test accounts may span from 600000000 to 699999999. Everything else is live data. The upside is that you can test transactions as they will flow in live processing. The risk is that test data is accidentally introduced into real operational processes, so be careful not to do things like mail out bills with test addresses. Hey, it happened during Y2K testing in some companies.
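A minimal sketch of that kind of segregation follows, using the account range given above. The function names, the bill structure, and the idea of filtering at the mailing step are assumptions made for illustration; a real system would apply the same guard wherever test data could leak into an operational process.

```python
# Test account range taken from the example in the text.
TEST_ACCOUNT_MIN = 600_000_000
TEST_ACCOUNT_MAX = 699_999_999


def is_test_account(account_number: int) -> bool:
    """Return True when the account falls inside the reserved test range."""
    return TEST_ACCOUNT_MIN <= account_number <= TEST_ACCOUNT_MAX


def select_for_mailing(bills):
    """Split bills into those safe to mail and test bills that are held back."""
    to_mail, held = [], []
    for bill in bills:
        if is_test_account(bill["account"]):
            held.append(bill)
        else:
            to_mail.append(bill)
    return to_mail, held


# Hypothetical bills: two live accounts and two at the edges of the test range.
bills = [
    {"account": 123456789, "amount": 42.10},
    {"account": 600000001, "amount": 9.99},
    {"account": 699999999, "amount": 1.00},
    {"account": 700000000, "amount": 5.00},
]

to_mail, held = select_for_mailing(bills)
print("mail:", [b["account"] for b in to_mail])
print("hold:", [b["account"] for b in held])
```

Testing the boundaries of the range (600000000, 699999999, and the values just outside) is worthwhile, since an off-by-one error here is exactly how a test bill ends up in the mail.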

Conclusion

There is much more that can be said about this topic of integration and interoperability testing. We cover many of these topics in our new three-day course, Integration and Interoperability Testing. The main point I wish to convey is that the integration and interoperability aspects of systems should not be minimized.

As platform possibilities continue to increase and global business needs drive more interoperability between critical supply chain systems, this topic will only get more important to testers. The integrated domain is inherently risky, and there is a tension between interoperability standards and the freedom to innovate and deploy new technologies quickly.

I predict that IIT will be a key concern as global B2B applications become a reality, and the sooner you develop your test strategies for IIT, the better.